Although transactional databases are still the most popular data stores, data professionals mostly interface with analytical data stores like BigQuery, Snowflake, and Databricks. Depending on who you ask, these technologies are called data lakes, data warehouses, or data lakehouses. While these software categories have a lot in common, they also differ in how they store data and process it. To make things more complicated, when you observe product roadmaps and releases, one could argue that the tools that were once positioned in just one of these categories are rapidly converging.

In this article, you'll take a look at each data store type from a functional perspective and review some use cases where each type shines. Instead of focusing on the technical properties of each solution, this guide prioritizes the business and organizational impact of choosing one or the other.

Differentiating between data lakes, warehouses, and lakehouses

To establish an overview of the three types of data stores, let's start with the most flexible: the data lake.

Data lakes

A data lake infrastructure usually consists of two essential components:

- The data repository is where vast amounts of data in raw format are stored. This data can be structured (typically CSV files), semistructured (typically JSON, Parquet, or Avro files), or unstructured (flat text files or even images and videos). This versatility offers extreme flexibility, is infinitely scalable, and is very cost-effective, as it doesn't require specialized storage and needs very little maintenance. Cloud storage, such as Amazon S3 and Google Cloud Storage, is an excellent choice.

- The processing engine is the second component of a data lake setup. Tools like Apache Spark, Presto, and Trino can process huge volumes of data across clusters of machines at record speeds. They support SQL-like languages to make the data available to a wide range of users but also offer APIs and connectors (like Python) that can be integrated into data engineering or data science workloads.

One of the major strengths of data lakes is that they're very flexible and capable of handling an ever-changing environment. Extra tables or extra fields have no impact on how the data is stored or processed.

Data warehouses

In the early days of computing, before cloud resources were readily available, data warehouses were organized as "OLAP cubes." They were the go-to tool for storing data in a way that was both memory-efficient and optimized for common analytical tasks like filtering and aggregating. Data warehouses were like a collection of nested arrays, carefully organized for easy access and manipulation.

Despite the somewhat misleading name "cubes," these tools could handle far more than three dimensions. In fact, around the turn of the millennium, OLAP cubes were essential for organizations looking to leverage their operational data for strategic decision-making.

However, with the rise of cloud computing and no hardware limitations, data warehouses were organized around a new paradigm: massively parallel processing. By decoupling storage and processing capabilities (below the hood), cloud computing enables infinite scaling across clusters of machines, even on a per-query basis. Additionally, unlike their transactional counterparts, data warehouses store data in columns, not rows. These properties are what drive a data warehouse's performance.

On the other hand, data warehouses are tailored to structured data and work best with predefined data models. Because of this more narrow scope, data warehouses are optimized for read operations in business intelligence and descriptive analytics. Although modern data warehouses have their storage and processing engine decoupled, they're offered as a single product or as a suite of proprietary tools, unlike data lake infrastructure.

Due to their rigid nature, data warehouses are the workhorse of most dashboards, automated reports, and ad hoc analytics environments. But this rigidity comes at a price; preparing data for consumption takes a lot of manpower and fragile data pipelines in which a single schema change can easily break a dozen downstream dependencies.

Data lakehouses

While data warehouses and data lakes sit at opposite ends of the flexibility spectrum, data lake houses cover the whole spectrum.

Databricks's release of the Delta Lake table format was the harbinger of an explosion of data lakehouse tools and vendors. Today, the three most popular data lakehouse table formats are Delta Lake, Hudi, and Iceberg. They each offer an extra abstraction layer and a metastore on top of data files (like Parquet). This enables processing engines to process hundreds of files as a single (structured) table. When data lakes get ACID properties, that's when you end up with a data lakehouse.

By offering both access to the underlying data files and the metastore, data lakehouses serve all types of users, from BI developers to AI engineers. Nevertheless, this hybrid approach comes with a certain amount of complexity. Although many lakehouse vendors have reduced the learning curve, it does take some effort and team procedure to keep the metastore and the underlying files synchronized. For example, when a table is dropped, should the underlying files also be deleted, or should they keep existing as a file? There's no single answer to that question.

Additionally, vendors within the analytical data store category are rapidly converging their products to a data lakehouse. For example, BigQuery now natively supports semistructured data and comes in a lakehouse flavor known as BigLake. In contrast, Snowflake has endorsed the Iceberg table format. Both vendors also support cross-cloud querying of all popular data files and lakehouse table formats.

It's also worth noting that even different table formats are converging on features like time travel, data constraints, schema evolution, indexing, and generated columns.

Use cases for data lakes, warehouses, and lakehouses

Which data storage type you'll choose will depend on your use case. Let's look at which data professionals tend to use each storage type and for what, as well as the types of companies that typically use each.

When to use a data lake

Data lakes are almost exclusively associated with data science and machine learning (ML) roles, and for good reason. Analysts like structured, well-documented data and mappable real-world entities (such as products, clients, and transactions), and a data lake doesn't offer any of that. In contrast, data scientists spend most of their time exploring data, looking for new features to add to their model, and experimenting with it. Often, they work with plain text that needs to be preprocessed, such as in natural language processing (NLP).

Data lakes are the data source for ML systems, supporting both algorithm development (the training phase) and MLOps practices like automatic model retraining when data or model drift occurs (the operational phase). When an organization prioritizes a data lake over a warehouse or lakehouse, it's usually because ML products, like curation recommendations and tools, are central to the business.

For example, app or web analytics often have objects stored as semistructured data, with each type of event having a different set of properties. You could create structured tables for every type of event after exploring all the available fields. However, a data scientist is typically more interested in discovering new signals in the available data, tucked away somewhere as a nested property.

Large companies with hundreds of algorithms and dedicated data teams typically use data lakehouses to ensure consistent data interpretation, while AI startups often use data lakes without formal governance.

When to use a data warehouse

Thirty years ago, data warehouses were mostly used by data analysts working in Excel with OLAP cubes. Although the practices changed drastically, the users did not.

Since data warehouses offer a structured overview of the data, often mappable to real-world entities, they're mostly used by data analysts who need to make comprehensible reports, dashboards, and ad hoc analyses for business users. They're typically assisted by data governance tools and data catalogs to help them perform their tasks.

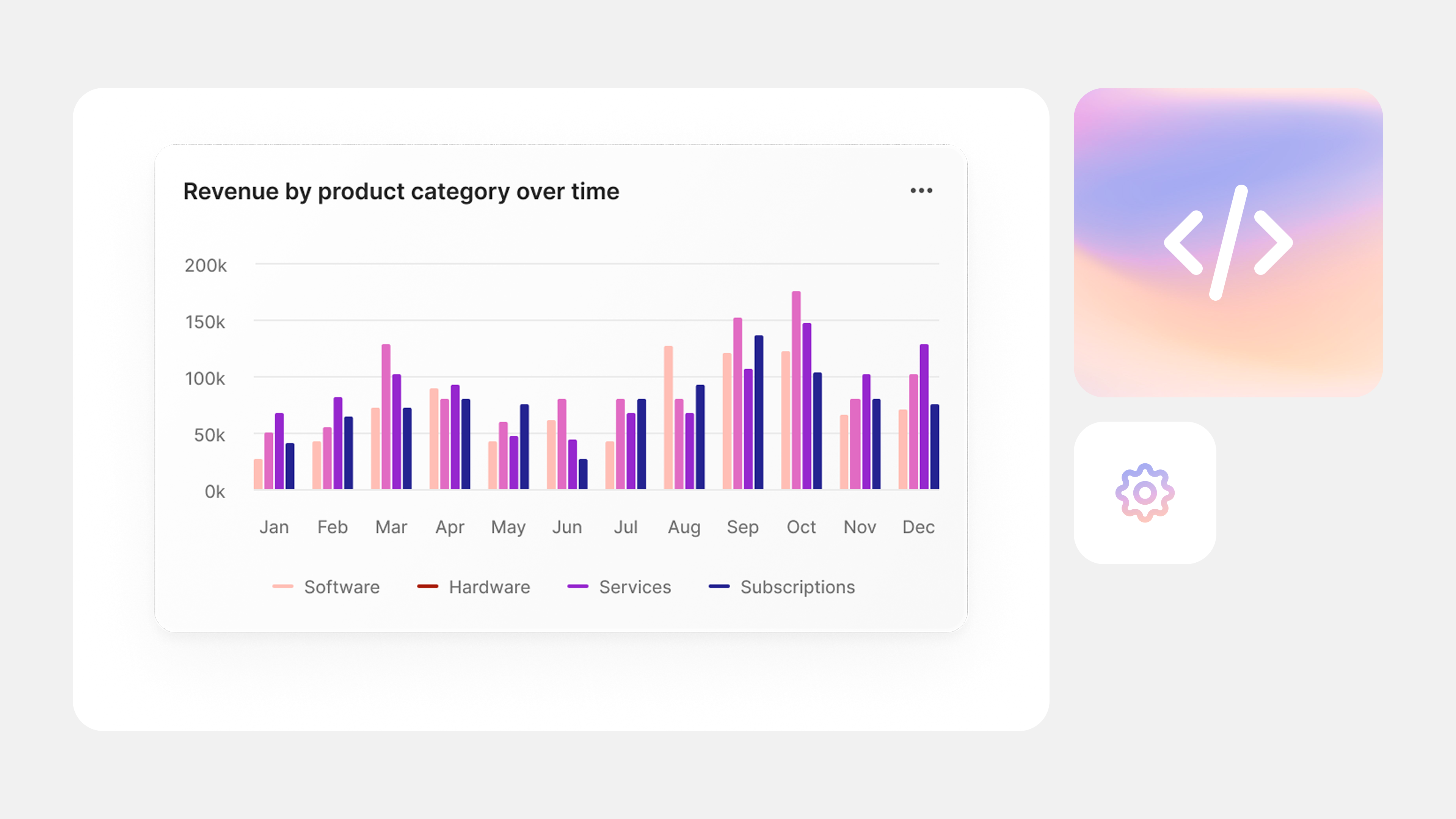

The main task of data warehouses is to offer an easily interpretable single source of truth. When business users are looking to find answers to business questions, data warehouses (often with the assistance of data analysts) are an excellent choice. When the tables are properly modeled and documented, questions like "What product category generates the most revenue?" and "Which sales channel has customers with the highest churn rate?" should only take a couple of hours, if not minutes, to answer.

Data warehouses are important for any company that wants to get involved with business intelligence. However, given the scale of efforts that are often required to come to high-quality data, small companies usually choose to focus on just a couple of data sources (like product sales). Huge companies without any interest in ML could also select a data warehouse over a data lakehouse.

When to use a data lakehouse

As you might suspect by now, a data lakehouse caters to the needs of all data professionals. Data analysts typically query the data through the metastore, which is a structured representation of the underlying data files. This structured representation is usually the result of an analytics engineer's work. Data scientists, depending on the type of data they're using, might directly access the underlying Parquet files. The same goes for ML engineers who set up automated AI systems that retrain themselves when necessary.

A data lakehouse combines the strengths of data warehouses and lakes, enabling data scientists to create features from unstructured data while easily accessing structured data via the metastore. Data analysts or BI engineers who want to visualize the monthly sales number per region can connect their analytics or dashboarding tool to generate insights in minutes.

Companies that use data lakehouses can cater to the needs of all their data professionals. For example, sales data can be used to make a recommendation engine (data scientists) or for building sales rep dashboards (data analysts). Additionally, data lakehouses can help prevent lock-in. That's because most lakehouse table formats are open source and can easily be transferred to another cloud environment or be queried with a different engine. However, these advantages require extra governance to ensure the metastore tables remain in sync with the files.

Don't leave your data ungoverned, undocumented, and unbrowsable

Whether you're using a data warehouse or a lakehouse, you don't want to leave your data ungoverned, undocumented, and unbrowsable. That's where Secoda comes in. Secoda is your data's command center. Need to onboard a new user? One interface to rule data access. Looking for the definition of a column? Secoda's data catalog has you covered. Woke up at 5 a.m. because of a data quality issue? Must be a Secoda alert.

Here's how Secoda can help:

- Data governance: Easily establish who owns what data, data lineage, and access controls.

- Data discovery: Explore data sets, query metadata, and discover tables you didn't know existed.

- Data quality: Ensure high-quality data and consistency.

- Data monitoring: Improve trust in your data by monitoring your data pipelines and creating data quality metrics. This way, data teams are notified immediately if something breaks.

This article outlined the differences between data lakes, warehouses, and lakehouses, highlighting their technical distinctions and their unique roles and use cases.

Data lakes are great for data scientists at startups with ML use cases, while data warehouses are the workhorse of the BI needs of most organizations. Data lakehouses can cater to the needs of most data professionals but require more complex governance.

If you're looking for a solution to document and govern your data, try Secoda. Your business users will thank you for correct, consistent dashboards.