Over the past decade, both storage and computing power have become significantly more accessible through the cloud. With platforms like Snowflake, BigQuery, and Redshift offering near-infinite scalability, powerful compute, and pay-as-you-go pricing, the business case is obvious. For example, for roughly a tenth of a cent a month, you can archive a gigabyte of data in Google's Cloud Storage.

As a result, many organizations stopped investing in their own server infrastructure and instead moved the architectures supporting their operational and analytical data needs to one of several cloud vendors. This has become a critical step for data-driven organizations looking to scale.

But getting to the cloud isn’t always straightforward. Legacy tools, undocumented pipelines, and fragile configurations can make it hard to even understand what you’re migrating. There’s also the risk of exposing sensitive data mid-transfer, or picking the wrong platform and ending up locked into costly, underperforming infrastructure. It’s no surprise many teams get stuck in analysis paralysis or overspend on consultants before they’ve even written a migration plan.

Whether you’re consolidating legacy systems, modernizing for the first time, or integrating newly acquired data sources, migrations are complex and often high-stakes. Done wrong, they can lead to broken dashboards, lost trust, or weeks of firefighting. Done right, they unlock better performance, increased discoverability, and long-term cost efficiency.

At companies like Vanta and Fullscript, Secoda has helped data teams navigate complex migrations by providing a single place to track lineage, monitor data quality, and document their data stack in real time.

In this article, you'll learn why teams migrate their data warehouses to the cloud, what best practices to follow, and how Secoda can help you manage the entire process with confidence.

Factors that shape your migration strategy

Not all migrations follow the same path. The right approach depends on several variables:

- Volume and variety of data: Some industries, like finance or healthcare, naturally generate more data from a wide range of sources. This often leads to more complex pipelines and longer migration timelines. In these cases, teams may need to run legacy and modern systems in parallel until the transition is complete.

- Operational criticality: When data powers day-to-day operations, there is little room for downtime. These migrations often require a cautious, phased rollout. For less critical analytical data, teams may opt to restructure and optimize their pipelines as they migrate.

- Time constraints: If your current technology is reaching end-of-life or poses security risks, speed becomes a top priority. A lift-and-shift approach, which moves data without major rework, can help meet tight deadlines while minimizing disruption.

- Technology compatibility: Migrating between similar technologies (like on-prem MySQL to cloud MySQL) is relatively simple. But moving from transactional to analytical systems, or across data models, often requires deeper transformation and refactoring.

Understanding these factors early helps you scope your migration more accurately and choose the right level of complexity for your goals.

Why migrate your data warehouse to the cloud

Scalability

Cloud data warehouses support elastic scaling and pay-as-you-go pricing models. Compute and storage resources can scale independently, meaning your infrastructure can flex up during peak usage and shrink down during quieter periods, so you save money when demand is low without giving up capacity when it is high.

Most providers also offer a suite of frictionless integrations with analytics, machine learning, and data sharing tools, so your data stack can grow as your business needs evolve.

Accessibility

Centralized identity management and role-based access controls in a cloud suite are often more convenient than managing access across a variety of on-premise tools. Users can access data securely without VPNs or complex networking, enabling remote collaboration and improving time to value. Many cloud data warehouses facilitate real-time data sharing and collaboration so that teams can work together more effectively regardless of their physical location.

Cost efficiency

Cloud data warehouses offer a level of performance that on-prem systems simply can’t match, and a big part of that comes down to how they separate storage from compute and use massively parallel processing (MPP).

With traditional infrastructure, storage and compute are tightly coupled. That setup is great for high-transaction systems that rely on ACID guarantees, but it isn’t ideal when flexibility and cost control matter more than strict consistency. In the cloud, storage and compute can scale independently, making it easier to handle spikes in data volume or processing without rebalancing your entire system. If a compute node fails, another one can take over without putting your data at risk.

Cloud warehouses also rely on MPP, a computing approach that splits a query across many processors working at the same time. This allows systems to crunch massive datasets faster than traditional sequential processing.

Combined, these innovations support on-demand usage and pricing models. Instead of paying to keep a server running 24/7, you only pay for the resources you actually use. BigQuery, for example, is serverless: with on-demand pricing you are charged for the bytes each query processes, while capacity-based pricing bills for the compute "slots" your workloads use. That means little to no idle infrastructure and a far more efficient cost structure.
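To make the pay-for-what-you-use argument concrete, here is a minimal back-of-the-envelope sketch. It models usage-based billing as a price per TiB scanned and an always-on server as a flat hourly rate. Both rates and the monthly scan volume are placeholder assumptions for illustration, not any vendor's actual prices.

```python
TIB = 2**40  # bytes in one tebibyte


def on_demand_cost(bytes_scanned: int, price_per_tib: float) -> float:
    """Cost when billing is proportional to the data scanned."""
    return bytes_scanned / TIB * price_per_tib


def always_on_cost(hours: float, price_per_hour: float) -> float:
    """Cost of a server that is billed whether or not it is busy."""
    return hours * price_per_hour


# Hypothetical inputs: replace with figures from your own billing data.
PRICE_PER_TIB = 5.0      # assumed usage-based rate
PRICE_PER_HOUR = 0.5     # assumed always-on server rate
HOURS_PER_MONTH = 730

usage_based = on_demand_cost(20 * TIB, PRICE_PER_TIB)
fixed = always_on_cost(HOURS_PER_MONTH, PRICE_PER_HOUR)

# Scan volume at which the two models cost the same.
break_even_tib = fixed / PRICE_PER_TIB

print(f"usage-based: ${usage_based:.2f}, always-on: ${fixed:.2f}")
print(f"break-even at {break_even_tib:.0f} TiB scanned per month")
```

Under these assumed numbers, usage-based billing is cheaper until the team scans more than the break-even volume each month. Heavy, steady workloads can tip the comparison the other way.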

Key steps to a typical migration

While no two migrations are the same, most follow a common sequence of steps. Here's what a typical cloud data warehouse migration looks like:

Step 1: Audit your current environment

Inventory all data sources, pipelines, dashboards, and dependencies. Identify what’s actively used, what’s redundant, and what needs to be refactored. Leverage usage analytics to speed up this process.

Step 2: Define your goals and success metrics

Clarify what you want out of the migration, whether that’s cost savings, faster performance, better governance, or something else. Align stakeholders on what success looks like.

Step 3: Choose your architecture and tooling

Select your cloud warehouse (e.g. Snowflake, BigQuery), transformation tools (e.g. dbt, Dataform), and ingestion solutions (e.g. Fivetran, Airbyte). Decide whether to take a lift-and-shift, re-platform, or hybrid approach.

Step 4: Map dependencies and plan your migration

Use lineage to understand how data flows through your systems. Break the migration into phases and decide which pipelines, models, or dashboards to migrate first.
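One way to turn lineage into phases is a topological sort: anything with no unmigrated upstream dependency can move in the current phase. The sketch below uses Python's standard `graphlib` module. The lineage dictionary is a made-up example, and in practice it would come from your lineage tool's export.

```python
from graphlib import TopologicalSorter

# Hypothetical lineage: each asset maps to the upstream assets it reads from.
lineage = {
    "raw.authors": set(),
    "raw.posts": set(),
    "analytics.title_author": {"raw.authors", "raw.posts"},
    "dashboard.top_authors": {"analytics.title_author"},
}


def migration_phases(dependencies):
    """Group assets into phases so every asset migrates after its upstreams."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the lineage has a cycle
    phases = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # assets whose upstreams are done
        phases.append(ready)
        sorter.done(*ready)
    return phases


for number, assets in enumerate(migration_phases(lineage), start=1):
    print(f"Phase {number}: {', '.join(assets)}")
```

Assets in the same phase have no dependencies on each other, so they can be migrated in parallel.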

Step 5: Migrate and validate your data

Move data into the new environment, rebuild necessary transformations, and use monitoring to ensure data completeness, freshness, and accuracy.

Step 6: Document as you go

Preserve knowledge by documenting tables, models, and pipelines throughout the migration. Use automation to scale this step and reduce manual effort.

Step 7: Run systems in parallel and compare results

Validate that the new environment matches the old. Check row counts, performance benchmarks, and user adoption before deprecating the legacy setup.
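A row count comparison is the simplest of these checks. The sketch below assumes you have already collected per-table counts from both environments into dictionaries. The table names and numbers are illustrative, and the optional tolerance is an assumption for tables that keep receiving writes while the two systems run in parallel.

```python
def compare_row_counts(legacy, new, tolerance=0.0):
    """Return a dict of table -> problem for tables that fail the check.

    tolerance is the allowed relative difference (0.001 means 0.1%).
    """
    issues = {}
    for table, expected in legacy.items():
        actual = new.get(table)
        if actual is None:
            issues[table] = "missing in new environment"
        elif abs(actual - expected) > tolerance * max(expected, 1):
            issues[table] = f"row count {actual} != {expected}"
    return issues


# Hypothetical counts gathered from each environment.
legacy_counts = {"authors": 120, "posts": 4500, "title_author": 4500}
new_counts = {"authors": 120, "posts": 4498}

for table, problem in compare_row_counts(legacy_counts, new_counts).items():
    print(f"{table}: {problem}")
```

Matching row counts do not prove the contents match, so treat this as a first gate before deeper checks such as column-level aggregates.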

Step 8: Cut over, monitor, and improve

Once confident, switch traffic to the new warehouse. Continue to monitor usage, track performance, and optimize based on real-world usage patterns.

Why migrations are hard and how Secoda helps

Despite the benefits, migrations come with risk. Legacy pipelines, undocumented dependencies, and inconsistent data ownership make it hard to plan and even harder to execute. Secoda addresses these challenges by giving data teams visibility and control across every stage of the migration.

Understanding dependencies with lineage

Before you migrate, you need to know what depends on what. Secoda automatically maps table- and column-level lineage across your warehouse, transformation layers like dbt, and downstream BI tools.

This visibility is especially important when untangling legacy systems or merging datasets from multiple environments. With lineage, you can see where data originates, how it moves through systems, and how it gets transformed, all in one place. That makes it easier to:

- Identify downstream dependencies before changing or deprecating a source

- Understand the broader impact of changes on dashboards, models, and pipelines

- Scope the migration effort more accurately

- Prevent outages by catching breaks before they happen

- Meet regulatory requirements for traceability in sensitive environments

In industries with strict compliance standards, lineage isn't just a nice-to-have. It's critical for tracking data flow and auditability.
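Conceptually, finding downstream dependencies is a graph traversal. The following sketch is not Secoda's implementation or API. It is a plain breadth-first search over a hypothetical edge map, shown only to illustrate what an impact analysis computes.

```python
from collections import deque


def downstream_of(asset, consumers):
    """Return every asset that directly or indirectly reads from `asset`.

    consumers maps an asset to the assets that read from it directly.
    """
    seen = set()
    queue = deque([asset])
    while queue:
        current = queue.popleft()
        for child in consumers.get(current, []):
            if child not in seen:  # guard against revisiting shared nodes
                seen.add(child)
                queue.append(child)
    return seen


# Hypothetical lineage edges, pointing from source to consumer.
consumers = {
    "mysql.posts": ["warehouse.posts"],
    "warehouse.posts": ["warehouse.title_author", "dashboard.post_volume"],
    "warehouse.title_author": ["dashboard.top_authors"],
}

impacted = downstream_of("mysql.posts", consumers)
print(sorted(impacted))
```

Everything in the returned set needs to be checked, repointed, or retired before the source is changed or deprecated.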

Prioritizing what matters

Migrating every single dashboard and dataset is rarely worth it. Secoda surfaces usage metrics so you can focus on assets that actually drive business value.
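As a rough illustration of usage-based prioritization, the sketch below splits dashboards into "migrate" and "retire" lists based on when each was last viewed. The 90-day window, the field names, and the sample records are all assumptions. Real usage data would come from your BI tool or catalog.

```python
from datetime import date, timedelta


def split_by_usage(dashboards, today, window_days=90):
    """Separate dashboards viewed within the window from stale ones."""
    cutoff = today - timedelta(days=window_days)
    migrate, retire = [], []
    for dashboard in dashboards:
        target = migrate if dashboard["last_viewed"] >= cutoff else retire
        target.append(dashboard["name"])
    return migrate, retire


# Hypothetical usage records.
dashboards = [
    {"name": "revenue_overview", "last_viewed": date(2024, 6, 25)},
    {"name": "churn_2021_adhoc", "last_viewed": date(2024, 1, 10)},
    {"name": "signup_funnel", "last_viewed": date(2024, 5, 2)},
]

migrate, retire = split_by_usage(dashboards, today=date(2024, 6, 30))
print("migrate:", migrate)
print("review for retirement:", retire)
```

A stale dashboard is a candidate for retirement, not an automatic deletion. Quarterly or annual reports can look unused inside a short window.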

Monitoring data quality during migration

Maintaining data integrity is one of the most important parts of any migration. What lands in the new warehouse has to be accurate, complete, and reliable.

Secoda offers a range of built-in monitors to help teams catch and resolve quality issues during and after migration. These monitors cover tables, columns, pipelines, and even warehouse-specific metrics:

- Tables: Row count, freshness

- Columns: Cardinality, null percentage, uniqueness, min/max/mean

- Pipelines: Job duration, success rate, error rate

- Warehouse usage (Snowflake): Cost, query volume, compute credits, storage usage

- Custom SQL: For defining tailored monitors specific to your needs

Each monitor is configurable and can alert you when values fall outside expected thresholds, helping you proactively flag anomalies and validate that data is flowing as expected.
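The idea behind a threshold monitor can be sketched in a few lines. This is a generic illustration, not Secoda's monitor configuration or API: it computes one column metric (null percentage) and checks it against assumed bounds.

```python
def null_percentage(values):
    """Percentage of entries that are None (0.0 for an empty column)."""
    if not values:
        return 0.0
    return 100.0 * sum(value is None for value in values) / len(values)


def check_threshold(metric, value, lower=None, upper=None):
    """Return an alert message if value is out of bounds, else None."""
    if lower is not None and value < lower:
        return f"{metric}={value} below {lower}"
    if upper is not None and value > upper:
        return f"{metric}={value} above {upper}"
    return None


# Hypothetical sample of a column after ingestion.
author_ids = [1, None, 3, None]

alert = check_threshold("null_pct", null_percentage(author_ids), upper=5.0)
if alert:
    print("ALERT:", alert)
```

The same `check_threshold` helper works for row counts or freshness lag, as long as the metric is reduced to a single number first.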

This kind of oversight is especially important when your migration spans multiple systems, has overlapping pipelines, or affects downstream reporting. Having structured checks in place reduces risk and increases trust in the final result.

Automate documentation to reduce friction

It’s important to maintain the context around the data that you are moving during a migration. Secoda functions as a central hub for metadata and documentation, helping teams preserve knowledge, enforce consistency, and streamline onboarding.

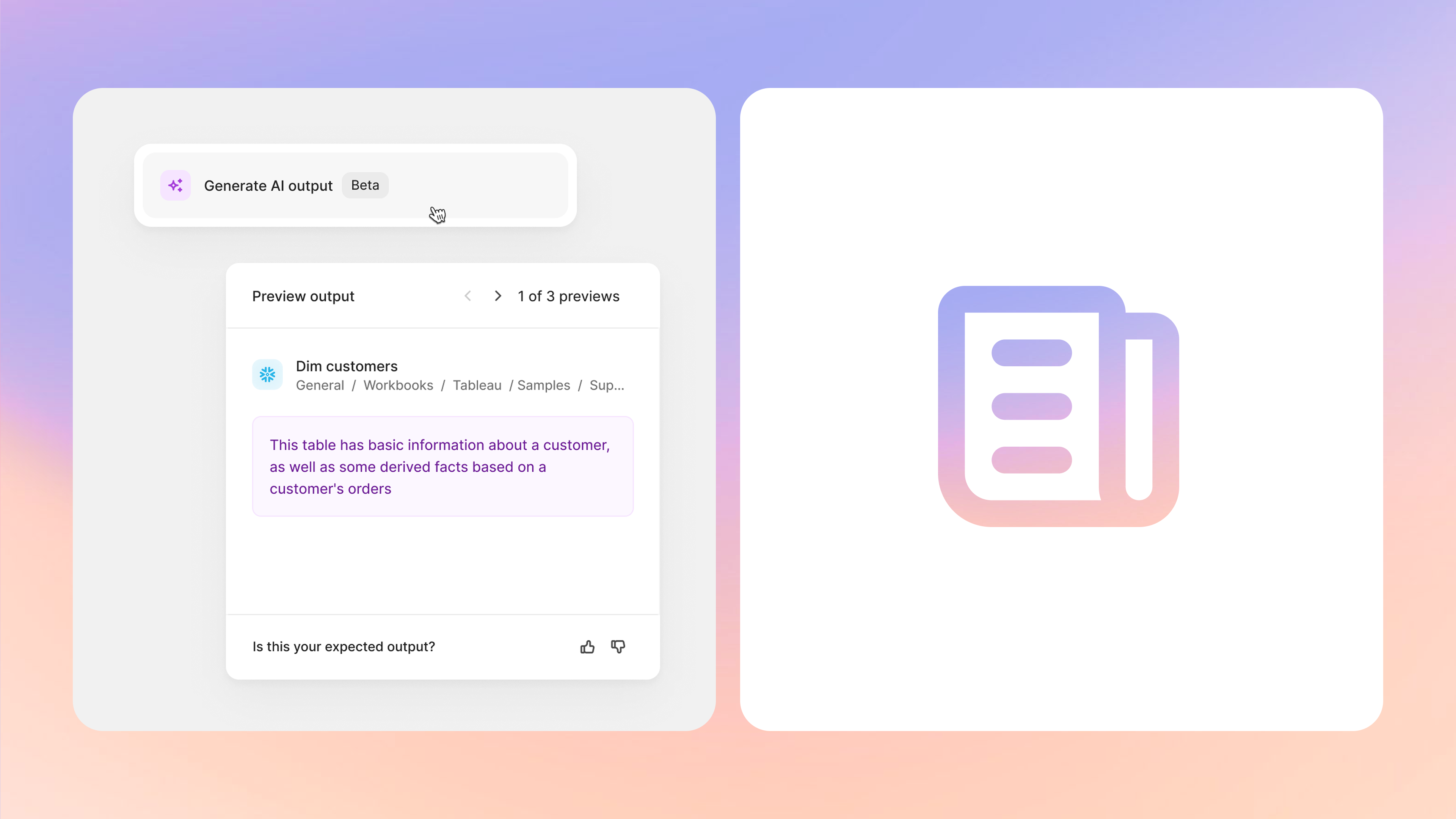

Secoda allows teams to auto-document assets using AI, propagate existing documentation, or import previous work using APIs. This becomes especially helpful when managing legacy systems or overlapping data pipelines.

A data catalog can also serve as a project management layer for your migration. It gives you a clear view of where each asset stands in the process, from planning and validation to final cutover. You can use metadata like tags, statuses, and ownership assignments to track progress across the stack.

Tracking progress and ownership

Secoda makes it easy to track which tables and models have been migrated, validated, or still need review. You can assign owners, apply tags, and use automations to update metadata at scale.

For teams running old and new environments in parallel, this visibility becomes even more valuable. You can use Secoda to validate changes across systems, confirm parity between source and destination, and establish confidence before fully switching over. Secoda also supports custom statuses and flexible metadata fields, which means you can treat your migration as a structured, trackable project. Instead of relying on disconnected spreadsheets or manual updates, your team has one place to document ownership, progress, and validation steps for every asset in the pipeline.

This level of coordination reduces confusion, helps ensure nothing is missed, and gives stakeholders a clear view of progress at every stage.
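To show what "trackable" can mean in practice, here is a small sketch that summarizes migration progress from per-asset metadata. The record shape, the owner names, and the three statuses (ready, in progress, validated) are illustrative assumptions, not a Secoda export format.

```python
from collections import Counter

STATUSES = ("ready", "in progress", "validated")

# Hypothetical per-asset metadata.
assets = [
    {"name": "authors", "owner": "data-eng", "status": "validated"},
    {"name": "posts", "owner": "data-eng", "status": "in progress"},
    {"name": "title_author", "owner": None, "status": "ready"},
]


def progress_report(assets):
    """Count assets per status and list the ones nobody owns yet."""
    counts = Counter(asset["status"] for asset in assets)
    return {
        "counts": {status: counts[status] for status in STATUSES},
        "unowned": [a["name"] for a in assets if not a["owner"]],
        "validated_share": counts["validated"] / len(assets) if assets else 0.0,
    }


report = progress_report(assets)
print(report["counts"])
print("needs an owner:", report["unowned"])
print(f"validated: {report['validated_share']:.0%}")
```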

Strengthening governance during and after migration

When you’re juggling multiple systems, stakeholders, and timelines, governance can easily fall through the cracks. But keeping data secure, accessible, and understandable is just as important during a migration as it is after.

Secoda helps organizations maintain strong governance throughout the process by giving teams the tools to:

- Enforce access controls with role-based access and custom roles, through a streamlined access management portal

- Enforce data ownership using automations and policies

- Track how data flows across systems with lineage

- Monitor data health with quality checks and alerts

- Maintain accurate, up-to-date documentation across assets

- Stay compliant with data regulations like HIPAA or GDPR

Because Secoda connects to your full stack, it becomes the central place to manage policy, visibility, and trust as your environment changes. Whether it’s ensuring sensitive data is properly tagged or surfacing stale assets, Secoda helps you stay in control without slowing things down.

Measuring adoption and value post-migration

After the migration, Secoda helps prove ROI. Usage metrics, query history, and popularity tracking make it easy to see which assets are being used and how often. It’s also a helpful way to see whether users are engaging with the newly migrated documentation or sticking with legacy sources.

Secoda can also serve as a checklist to measure completeness. As the single source of truth for documentation and metadata, Secoda allows you to track whether all key assets have been migrated, tagged, documented, and verified. This makes it easier to assess migration progress and identify any gaps in coverage.

Migrating from on-premise MySQL to BigQuery with Secoda

Let’s say you’re migrating two tables and a view from MySQL to BigQuery via Fivetran:

- authors

- posts

- title_author (a view dependent on the two tables)

Fivetran handles the raw tables, but the view must be rebuilt. This is a common scenario, especially when moving from OLTP systems like MySQL to an OLAP warehouse like BigQuery. Often, the destination schema needs to be restructured to support analytical use cases, and assets like views or models have to be rebuilt using transformation tools like dbt or Dataform.

Here’s how Secoda fits into that workflow:

- Scan and catalog metadata from both MySQL and BigQuery

- Compare schemas, row counts, and freshness across environments to validate parity

- Use lineage to identify upstream and downstream dependencies before deprecating or altering any sources

- Tag assets by migration status (e.g. ready, in progress, validated) to keep the team aligned

- Set up monitors to track row count, freshness, and anomalies post-ingestion

- Propagate documentation using AI and automations to ensure nothing is lost in translation

- Track adoption of newly migrated assets with usage metrics and popularity scoring

This level of structure helps you run both environments in parallel if needed, validate the migration before switching over, and minimize surprises during go-live. With everything tracked in one place, migrations become easier to manage and easier to trust.
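The parity step for the rebuilt view can be illustrated with a content fingerprint: a row count plus an order-independent hash of the rows. The sketch below uses two in-memory SQLite databases as stand-ins for MySQL and BigQuery so that it runs anywhere. The column names and the view definition are assumptions, since the article does not specify the schema.

```python
import hashlib
import sqlite3

# Assumed schema for authors, posts, and the title_author view.
SCHEMA = """
CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE posts (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
INSERT INTO authors VALUES (1, 'Ada'), (2, 'Grace');
INSERT INTO posts VALUES (10, 1, 'Intro'), (11, 2, 'Compilers'), (12, 1, 'Notes');
CREATE VIEW title_author AS
    SELECT p.title, a.name AS author
    FROM posts p JOIN authors a ON a.id = p.author_id;
"""


def make_environment():
    """Create a stand-in database with both tables and the rebuilt view."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def fingerprint(conn, relation):
    """Return (row_count, sha256 of sorted rows) for a trusted relation name."""
    rows = conn.execute(f"SELECT * FROM {relation}").fetchall()
    digest = hashlib.sha256()
    for row in sorted(rows):  # sort so row order does not affect the hash
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


legacy = make_environment()       # stands in for MySQL
destination = make_environment()  # stands in for BigQuery

for relation in ("authors", "posts", "title_author"):
    match = fingerprint(legacy, relation) == fingerprint(destination, relation)
    print(f"{relation}: {'parity' if match else 'MISMATCH'}")
```

On real systems, pulling every row to hash it client-side does not scale, so teams typically compute aggregates inside each warehouse and compare those instead.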

How modern data teams manage complex migrations with Secoda

Several leading data teams have used Secoda to simplify their cloud migration process and reduce risk while scaling to new platforms.

Vanta used Secoda to track lineage throughout their Snowflake migration, helping them avoid downstream issues and ensure a smooth, disruption-free cutover. By surfacing usage insights, they also reduced dashboard migrations by over 80%, focusing only on the 125 dashboards that were still actively used. To preserve valuable context, Vanta leveraged Secoda’s API to carry over legacy documentation into Snowflake, saving time and eliminating redundant work.

Fullscript took a similar approach during their post-acquisition migration to BigQuery and dbt. With over 100 new data sources to integrate, the team used Secoda to keep everything organized and identify which models were still relevant. In their legacy environment, it was difficult to pinpoint where data issues originated. With Secoda’s monitors and lineage, they were able to proactively detect and resolve problems, building stakeholder trust and significantly reducing troubleshooting time.

TextUs streamlined their migration to BigQuery by using Secoda’s AI-powered documentation to fill in knowledge gaps. This helped reduce onboarding friction for new team members and ensured that documentation stayed consistent across both legacy and modern environments.

Whether it’s optimizing what to migrate, reducing manual overhead, or improving quality checks, these teams show what’s possible when migrations are managed in a structured, centralized way.

Final thoughts

Migrating to the cloud is only part of the journey. What happens after the migration is just as important. With Secoda in place, teams gain lasting visibility, trust, and efficiency across their data stack.

Here’s what success looks like after the cutover:

- Increased trust as teams proactively catch issues and maintain data quality through built-in monitors and alerts

- Higher adoption with documentation, lineage, and access centralized in one place for easy collaboration

- Improved visibility into what’s being used, what’s stale, and where to focus optimization

- Faster onboarding for new team members through AI-generated documentation and clear ownership

- Proven ROI from usage metrics and popularity tracking that show what assets are driving value

Secoda helps you maintain momentum after migration by giving your team a single platform to manage governance, quality, and collaboration at scale.

Curious to learn more? Book a demo to see how teams like yours migrated to the cloud with confidence.
